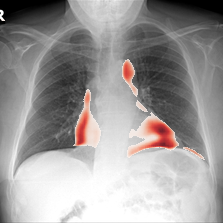

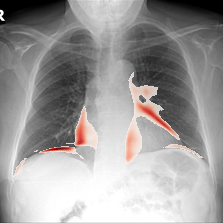

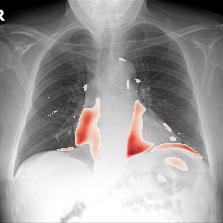

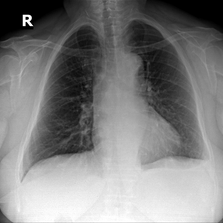

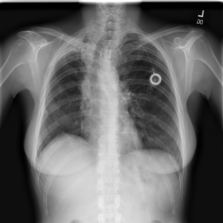

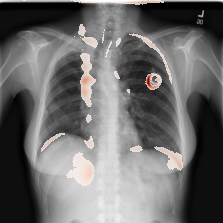

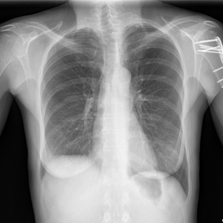

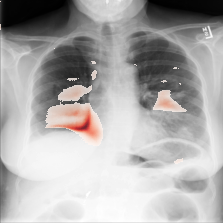

| Input Image |

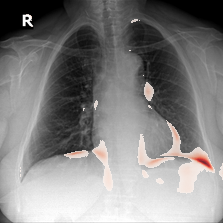

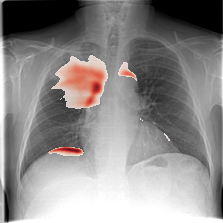

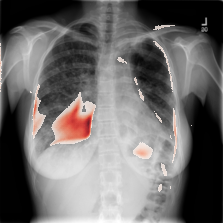

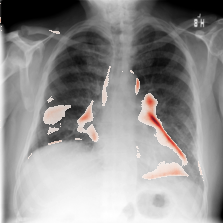

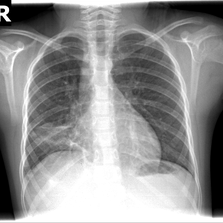

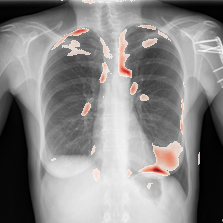

TorchXRayVision DenseNet121-all: trained on the PadChest, NIH, CheXpert, and MIMIC-CXR datasets

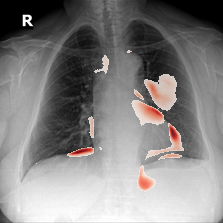

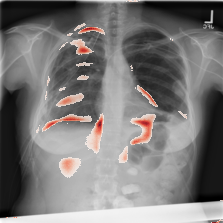

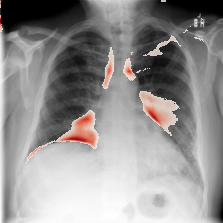

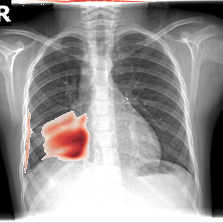

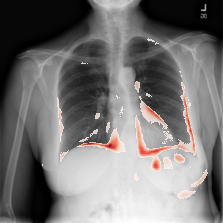

TorchXRayVision DenseNet121-mimic_ch: trained on the MIMIC-CXR dataset

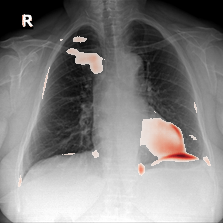

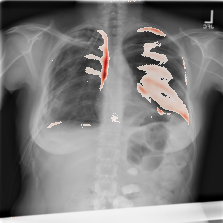

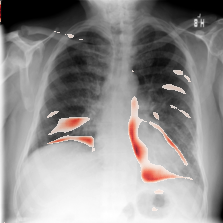

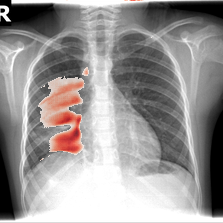

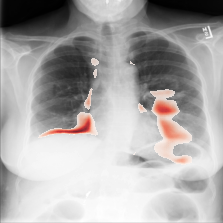

JF Healthcare DenseNet121: trained on CheXpert data for the CheXpert challenge

|||

| Latent Shift 2D | Latent Shift Gif | Latent Shift 2D | Latent Shift Gif | Latent Shift 2D | Latent Shift Gif | |

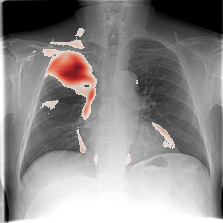

Prediction of Cardiomegaly

|

|

|

|

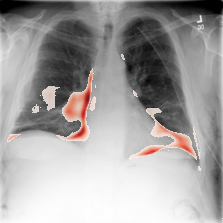

Prediction of Effusion

|

|

|

|

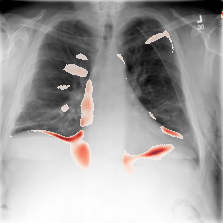

Prediction of Atelectasis

|

|

|

|

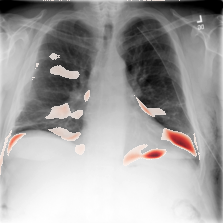

Prediction of Consolidation

|

|

|

|

Prediction of Mass

|

|

- | - | - | - |

Prediction of Pneumothorax

|

|

|

- | - |

Prediction of Infiltration

|

|

- | - | - | - |

Prediction of Edema

|

|

|

|

Prediction of Emphysema

|

|

- | - | - | - |

Prediction of Fibrosis

|

|

- | - | - | - |

Prediction of Pneumonia

|

|

|

- | - |

Prediction of Pleural_Thickening

|

|

- | - | - | - |

Prediction of Hernia

|

|

- | - | - | - |

Prediction of Lung Opacity

|

|

|

- | - |